Remember when Elon Musk made Bitcoin crash by tweeting that Tesla would stop accepting it due to environmental concerns, and everyone was worried about the environmental impact of proof-of-work mining? That was in 2021, and degens haven’t forgotten.

Yet today, Musk’s xAI is building what might be the world’s largest AI supercluster, with governments rushing to create laws to boost AI innovation—while hardly anyone is questioning the energy consumption.

A new peer-reviewed research paper published in the scientific journal Joule estimates that artificial intelligence could account for up to 49% of global data center electricity usage by the end of 2025, surpassing even Bitcoin’s notorious energy appetite.

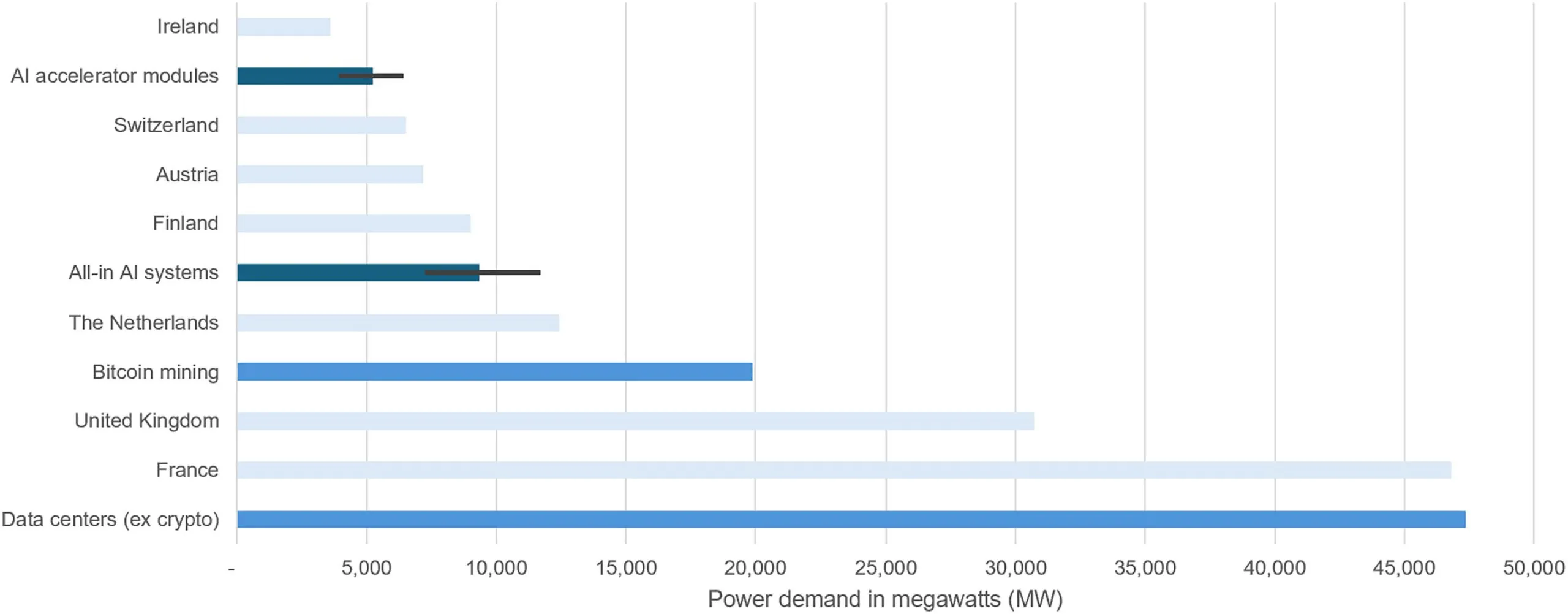

Alex de Vries-Gao, a PhD candidate at Vrije Universiteit Amsterdam and longtime Bitcoin energy consumption critic, found AI’s power demand could hit 23 gigawatts by January 1, equivalent to about 201 terawatt-hours annually. Bitcoin currently consumes around 176 TWh per year.
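For readers who want to check the conversion, here is a minimal sketch of how a gigawatt figure becomes an annual terawatt-hour figure. It assumes the hardware draws that power continuously for a full 8,760-hour year; the function name is ours, not something from the paper.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def gw_to_twh_per_year(gigawatts: float) -> float:
    """Annual energy in TWh for a load that draws `gigawatts` around the clock.

    Assumption: constant draw all year, which is a simplification.
    """
    gwh = gigawatts * HOURS_PER_YEAR  # gigawatt-hours in one year
    return gwh / 1000                 # 1 TWh = 1,000 GWh


ai_twh = gw_to_twh_per_year(23)       # the 23 GW figure cited above
bitcoin_twh = 176                     # Bitcoin's annual estimate cited above

print(f"AI at 23 GW: {ai_twh:.1f} TWh per year")
print(f"Above Bitcoin's 176 TWh: {ai_twh > bitcoin_twh}")
```

Run as written, this lands at roughly 201 TWh, in line with the article's figure.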

Image: Joule

“Big tech companies are well aware of this trend, as companies such as Google even mention having faced a ‘power capacity crisis’ in their efforts to expand data center capacity,” de Vries-Gao wrote on LinkedIn. “At the same time, these companies prefer not to talk about the numbers involved.”

“Since ChatGPT kicked off the AI hype, we’ve never seen anything like this again,” he added. “As a result, it remains virtually impossible to gain a good insight into the actual energy consumption of AI.”

Unlike Bitcoin’s transparent energy consumption, which anyone can calculate from the network hash rate, AI’s power hunger is deliberately opaque. Companies such as Microsoft and Google reported increasing electricity consumption and carbon emissions in their 2024 environmental reports, citing AI as the main driver of this growth. However, these companies only provide metrics for their data centers in total, without specifically breaking out AI consumption.

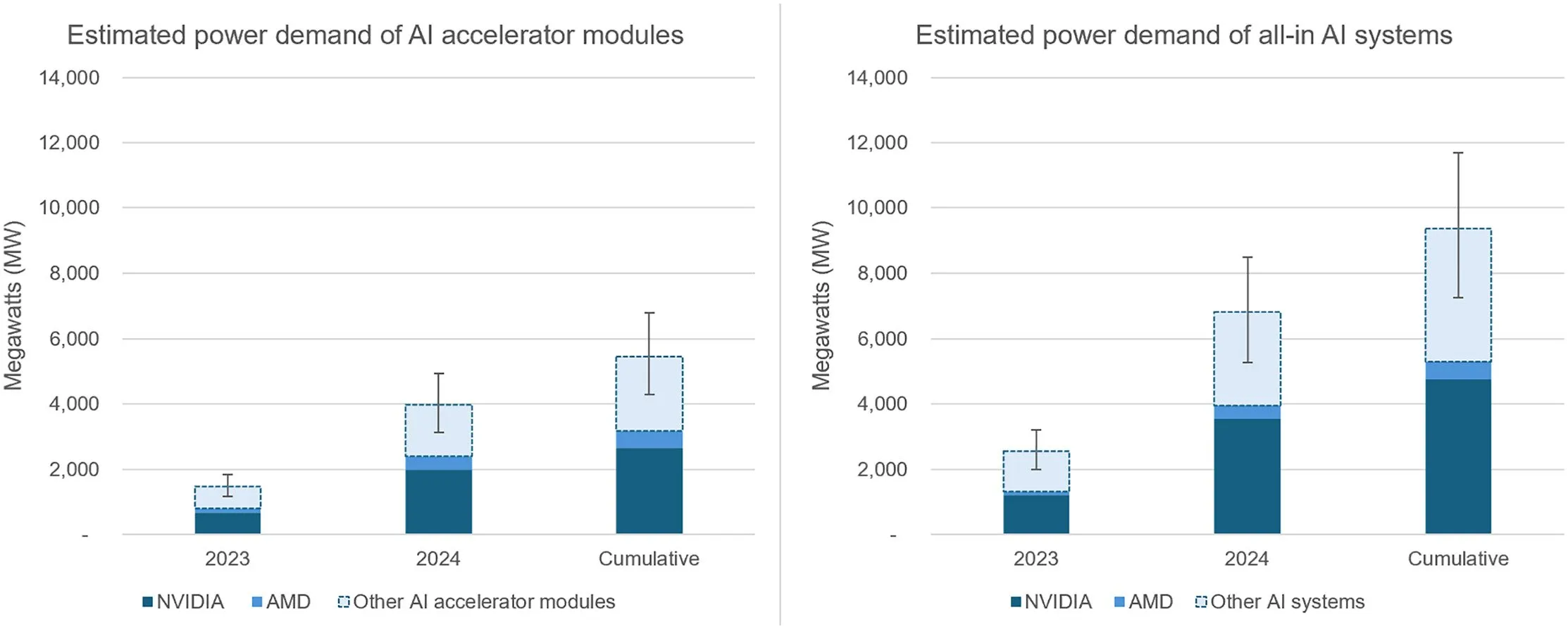

Since the tech giants refused to disclose AI-specific energy data, de Vries-Gao followed the chips. He tracked Taiwan Semiconductor Manufacturing Company’s chip packaging capacity, since virtually every advanced AI chip requires its technology.

The math, de Vries-Gao explained, works like a business card analogy. If you know how many cards fit on a sheet and how many sheets the printer can handle, then you can calculate total production. De Vries-Gao applied this logic to semiconductors, analyzing earnings calls where TSMC executives admitted to “very tight capacity” and being unable to “fulfill 100% of what customers needed.”
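The analogy boils down to two multiplications, sketched below. Every number here is a made-up placeholder chosen for round arithmetic, not a figure from the paper or from TSMC, and the function names are hypothetical. The paper's actual model is more detailed than this.

```python
def total_units(units_per_sheet: int, sheets: int) -> int:
    """Business-card logic: items per sheet times sheets the printer can handle."""
    return units_per_sheet * sheets


def fleet_power_gw(units: int, watts_per_unit: float) -> float:
    """Power drawn if every produced unit runs at `watts_per_unit`, in gigawatts."""
    return units * watts_per_unit / 1e9  # 1 GW = 1,000,000,000 W


# Placeholder inputs for illustration only (NOT real production data):
chips_per_wafer = 30         # hypothetical "cards per sheet"
wafers_per_year = 100_000    # hypothetical "sheets the printer can handle"
watts_per_chip = 1_000       # hypothetical per-chip power draw, including overhead

chips = total_units(chips_per_wafer, wafers_per_year)
print(f"Chips produced: {chips:,}")
print(f"Implied power demand: {fleet_power_gw(chips, watts_per_chip):.1f} GW")
```

Swap in real packaging capacity and per-chip power figures and the same two steps give an estimate of how much electricity a year's worth of chips could demand.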

His findings: Nvidia alone used an estimated 44% of TSMC’s CoWoS (chip-on-wafer-on-substrate) advanced packaging capacity in 2023 and 48% in 2024. With AMD taking another slice, these two companies could produce enough AI chips to consume 3.8 GW of power before even considering other manufacturers.

Image: Joule

De Vries-Gao’s projection showed AI hitting 23 GW by the end of 2025, assuming no additional production growth. That assumption may prove conservative: TSMC has already confirmed plans to double its CoWoS capacity again in 2025.

Power demand is unlikely to slow down. Nvidia and AMD announced record revenue, while OpenAI announced Stargate, a $500 billion data center venture. Indeed, AI is arguably the most lucrative business in the tech industry: each of the three largest tech companies in the world is worth more than the entire $3.4 trillion crypto ecosystem.

So the environment will probably have to wait.
